Daniel Krivokuca

Welcome to my personal website! This is where I host all of my finished projects.

I'm a software engineer from Waterloo, Ontario with a bachelor's degree in computer science and a passion for writing beautiful, robust software. I'm experienced in writing web applications and training machine learning models, and I have a passion for quantitative finance.

Want to contact me? Fill out the form below and I will be sure to get back to you!

My Skills

Data Mining

I love building tools, crawlers, and collection systems for acquiring and extracting insights from publicly available data. I've written data acquisition and mining tools for all of my personal projects, and it's a really fun hobby of mine.

Web Development

I'm experienced in JavaScript/TypeScript and React (and Next.js). Building beautiful, performant web applications is an art I've been cultivating for many years, and I have a good grasp of web fundamentals and good UI/UX design. You can view some of my work below :)

Machine Learning

I have experience training and deploying machine learning models. My go-to ML stack is NumPy for cleaning and loading data and PyTorch for building and training models. I am currently learning more about JAX and transformers.

Backend Development

I'm comfortable writing high-performance backend applications, such as the message broker written in Rust that powers my VPush project. Optimizing and debugging applications to make them less fault-prone is something I aim to do in every project I work on.

Quantitative Finance

Because of the sheer amount of publicly available financial data, I find financial modelling and quantitative analysis to be a rewarding hobby, and I have various projects related to this field. Finding relationships between companies and modelling their performance is very fun.

My Projects

These are some of the finished projects I've built over the years.

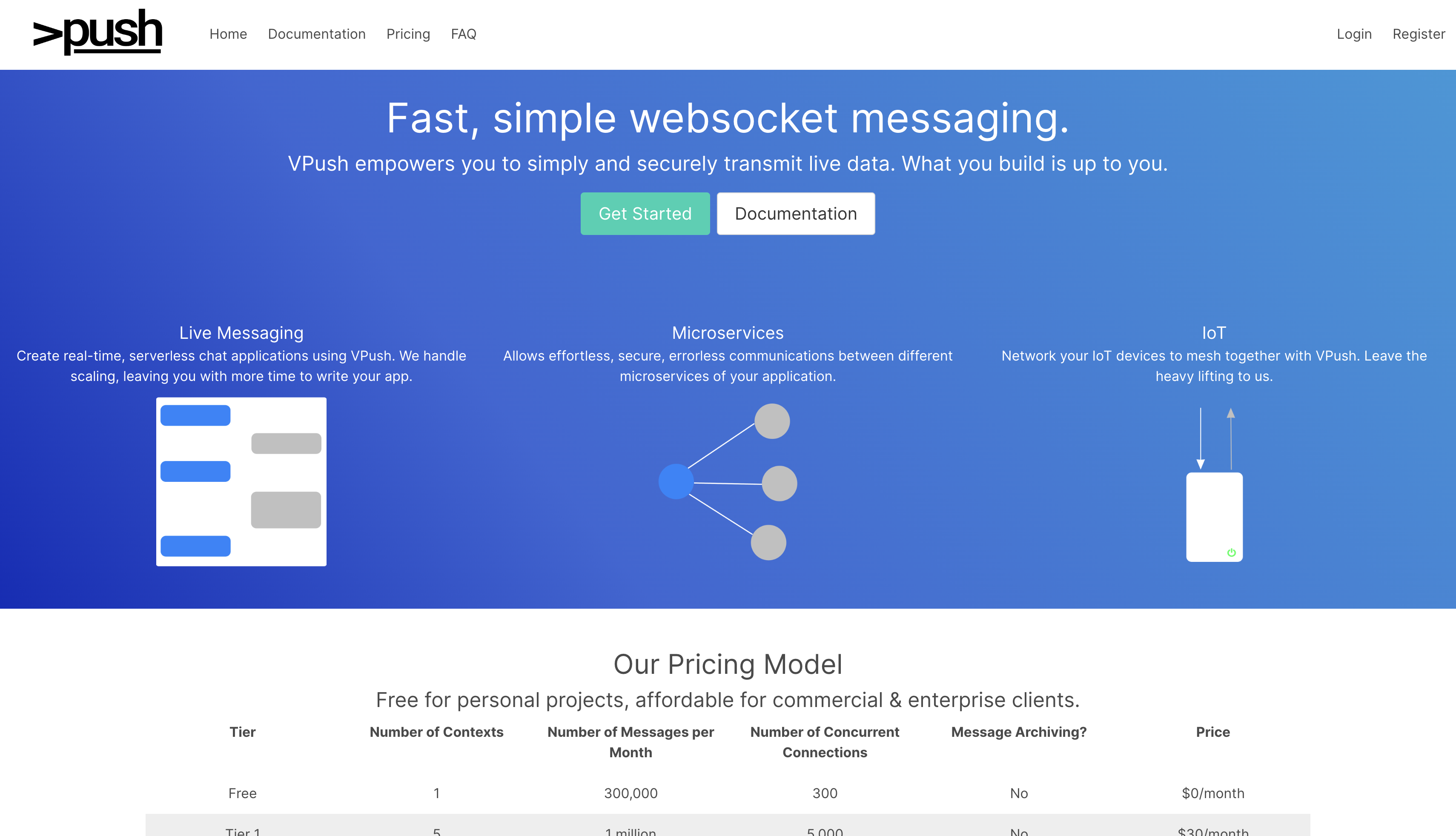

VPush

VPush is a websocket-based live messaging service that lets developers build real-time applications simply and seamlessly. VPush provides a websocket API that can support a large number of concurrent connections along with a high throughput of messages.

The backend message broker is written in Rust and is designed to be infinitely scalable. The frontend is written in Next.js and TypeScript. The accounts & billing API is written natively in TypeScript, while the websocket API is written in Rust using the Warp crate.

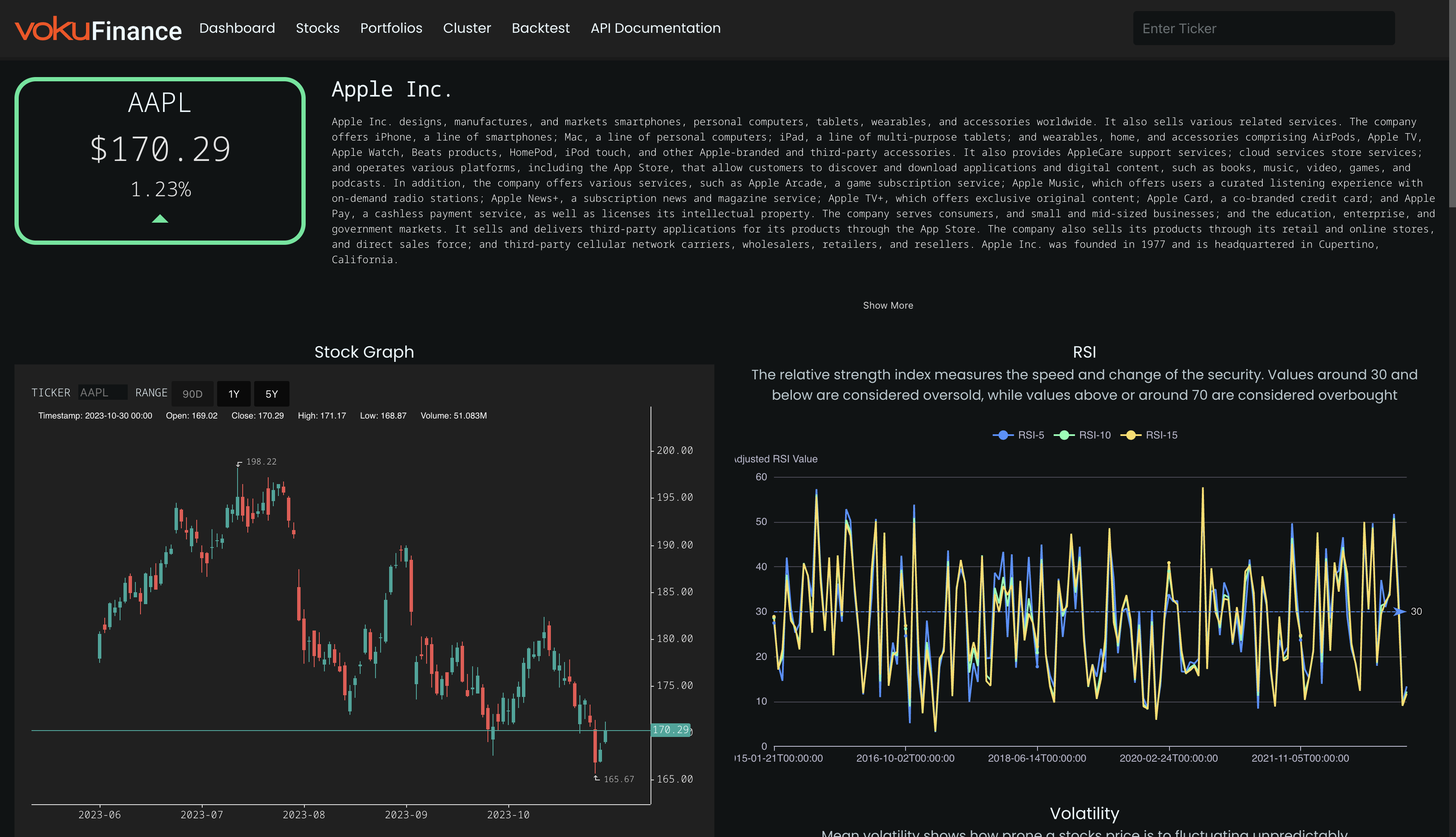

Voku Finance

Voku Finance is a financial data management system that gives users a free stock & ETF API for retrieving financial data about corporations and ETFs, a high-density clustering model that groups 4,000+ publicly traded companies based on 35 different features, and a data visualization tool for analyzing a stock's performance.

The backend stock API, clustering model, and data mining tools are written in Python, including FundFinder, a data acquisition tool that retrieves ETF data from Vanguard, iShares, and State Street. Voku Finance is by far my most successful project, with the API serving over 85,000 requests per month.

Voku Financial News API

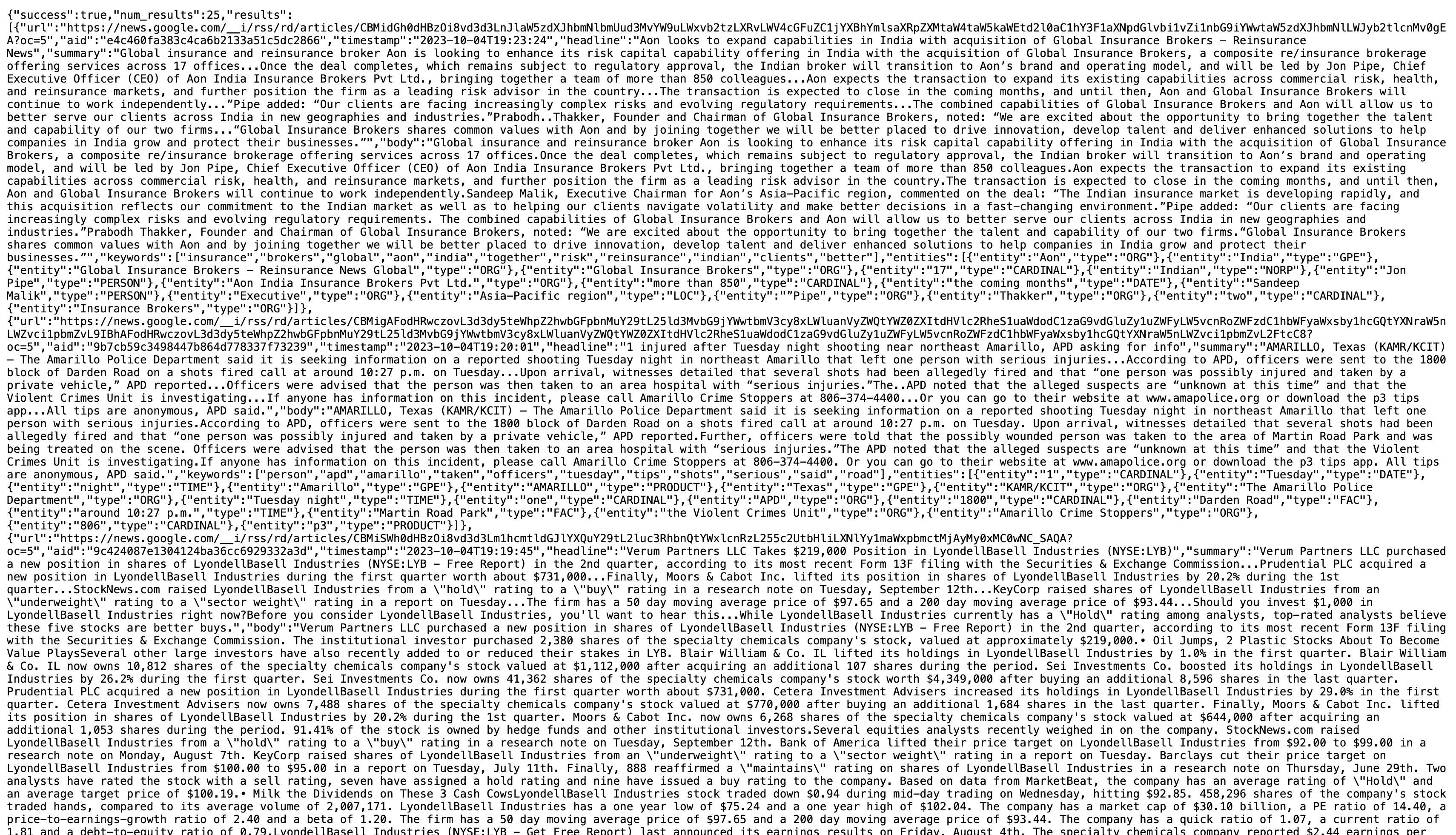

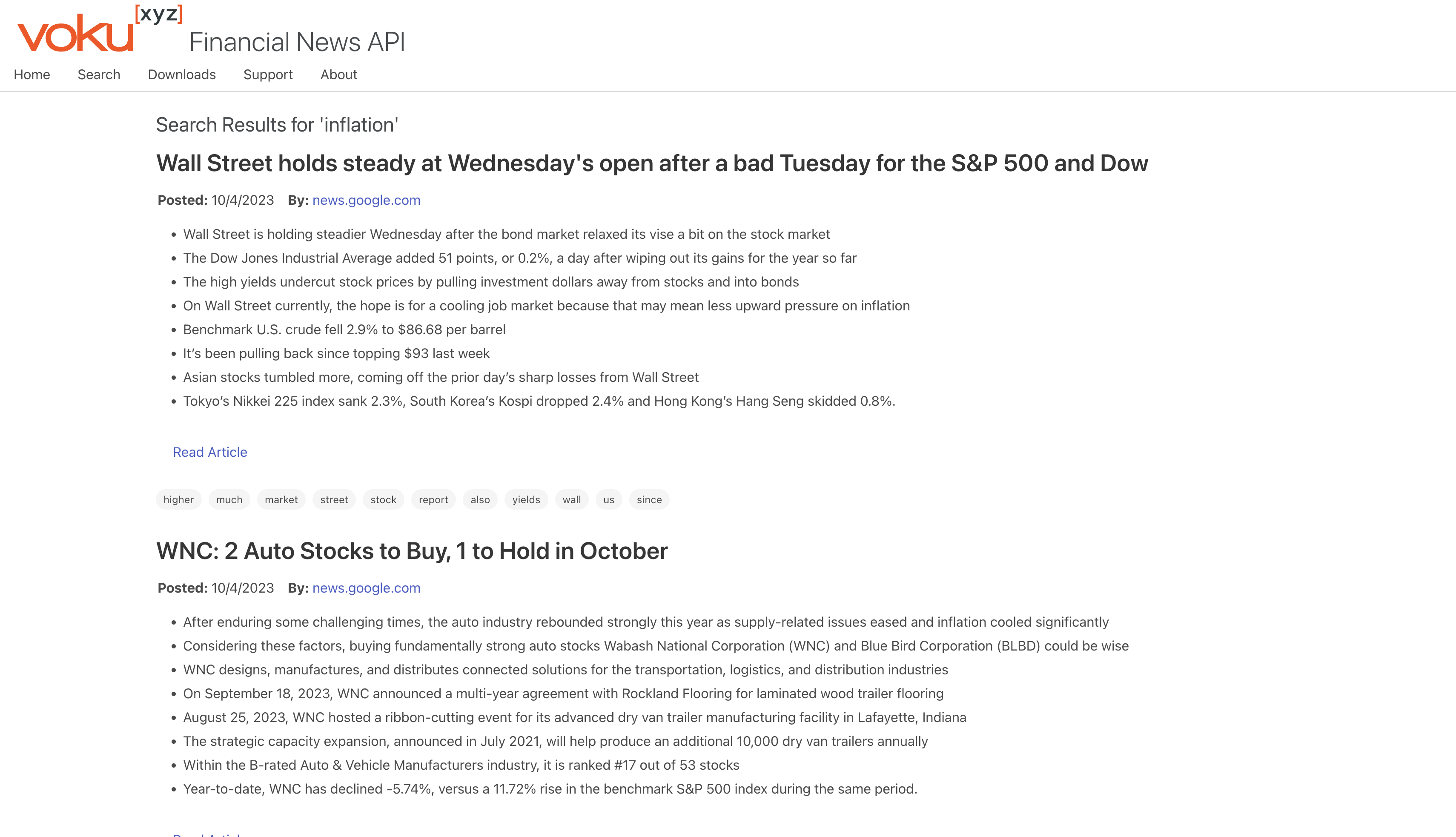

My financial news API and dataset form a public repository of more than 2 million financial news articles that is continuously updated. News is gathered from multiple high-quality sources, distributed via a publicly available API, and presented in a beautiful, concise format on the main website.

The frontend of the website is written in React (Next.js) and TypeScript, the backend API service is written in Python, and the entire site is indexed via Elasticsearch. Articles are first uploaded to a PostgreSQL database for long-term archiving and are then added to the main Elasticsearch cluster, which allows them to be queried quickly and efficiently.
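
To illustrate the archive-then-index flow, here is a minimal Python sketch. It is not the production code: `Article`, `InMemoryArchive`, `InMemoryIndex`, and `ingest` are hypothetical names, and the two in-memory classes are simple stand-ins for the PostgreSQL database and the Elasticsearch cluster so the example runs with the standard library alone.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol


@dataclass(frozen=True)
class Article:
    """Hypothetical article record; the real schema is not shown here."""
    url: str
    title: str
    body: str


class ArchiveStore(Protocol):
    def save(self, article: Article) -> int: ...


class SearchIndex(Protocol):
    def index(self, doc_id: int, article: Article) -> None: ...


@dataclass
class InMemoryArchive:
    """Stand-in for the long-term archive (PostgreSQL in the real system)."""
    rows: Dict[int, Article] = field(default_factory=dict)

    def save(self, article: Article) -> int:
        doc_id = len(self.rows) + 1  # sequential id, like a serial primary key
        self.rows[doc_id] = article
        return doc_id


@dataclass
class InMemoryIndex:
    """Stand-in for the search cluster; uses a naive substring match."""
    docs: Dict[int, Article] = field(default_factory=dict)

    def index(self, doc_id: int, article: Article) -> None:
        self.docs[doc_id] = article

    def search(self, term: str) -> List[int]:
        needle = term.lower()
        return [
            doc_id
            for doc_id, a in self.docs.items()
            if needle in a.title.lower() or needle in a.body.lower()
        ]


def ingest(article: Article, archive: ArchiveStore, index: SearchIndex) -> int:
    """Archive the article first, then make it searchable under the same id."""
    doc_id = archive.save(article)
    index.index(doc_id, article)
    return doc_id
```

Archiving before indexing means the archive stays the source of truth, so the search index could be rebuilt from it if needed. Swapping the stand-ins for real database and search clients would only require objects that provide the same `save` and `index` methods.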

ArticleCrawler